Child Abuse Victim Urges Elon Musk to Remove Exploitative Content From X

A survivor of child sexual abuse has made an emotional plea to Elon Musk, asking him to take stronger action against the circulation of child exploitation material on his social media platform, X.

The woman, who uses the pseudonym Zora, told the BBC that images of her abuse, first recorded more than 20 years ago, are still being traded and sold online.

“Hearing that my abuse, and the abuse of so many others, is still being circulated and commodified here is infuriating,” Zora said.

“Every time someone shares or profits from this material, they fuel the original trauma.”

X’s Response

In a statement, X said it has “zero tolerance for child sexual abuse material” (CSAM) and that combating such exploitation remains a “top priority.” However, the BBC investigation discovered accounts openly advertising abusive content on the platform.

One account, linked to a trader in Jakarta, Indonesia, was found offering thousands of illegal photos and videos for sale. Investigators made contact via Telegram and traced payments to a local bank account.

A Lifetime of Trauma

Zora revealed she was abused by a family member as a child. The images from that period have become infamous among online abusers who continue to trade them decades later.

“My body is not a commodity. It never has been, and it never will be,” she said.

“Those who distribute this material are not bystanders—they are complicit perpetrators.”

The Scale of the Crisis

The global market for child sexual abuse material is worth billions of dollars, according to research by Childlight, the Global Child Safety Institute.

In the United States alone, the National Center for Missing and Exploited Children (NCMEC) received over 20 million reports of CSAM from tech companies in 2024. These reports are passed on to law enforcement, but experts say the sheer volume makes the fight overwhelming.

Abusive Content Moving Into the Open

What makes the problem more alarming is that images once confined to the “dark web” are now surfacing on mainstream platforms. In Zora’s case, content linked to her abuse is being promoted openly on X through accounts advertising links to Telegram.

Hacktivist groups such as Anonymous have been monitoring the issue and claim the trade is “as bad as ever.” One member pointed the BBC to an account whose bio contained coded language and emojis signaling access to exploitative material. The profile photo showed a child’s face. Although the image itself was not explicit, the intent was clear.

A Call for Stronger Action

Campaigners argue that while platforms like X, Meta, and Telegram have made public commitments to fight CSAM, enforcement remains inconsistent. The persistence of such content raises questions about detection methods, reporting systems, and accountability for tech leaders.

For victims like Zora, every new reposting of their images represents a revictimization.

“Survivors like me are forced to relive the trauma,” she said. “It should not be happening in 2025. Platforms must do more.”

Moving Forward

Experts suggest that better use of AI detection tools, stricter moderation policies, and international cooperation between tech companies and law enforcement are essential steps. Until real progress is made, however, survivors like Zora will continue to battle not only their past abuse but also its endless digital echoes.

Read more: Child Abuse Victim Urges Elon Musk to Remove Exploitative Content From X- Singles Spot – Child Safety Standards

- Singles Spot — Terms & Information

- Privacy Policy for Singles Spot

- Israel To Present Highest Civilian Honour To Trump

- Naira Strengthens as Forex Inflows Boost Market Confidence

- BBNaija Season 10 housemates visit PZ Cussons

- AfDB to Lend Nigeria $500 Million in Budget Support Amid Ongoing Reforms

- Privacy Policy for Status Saver App

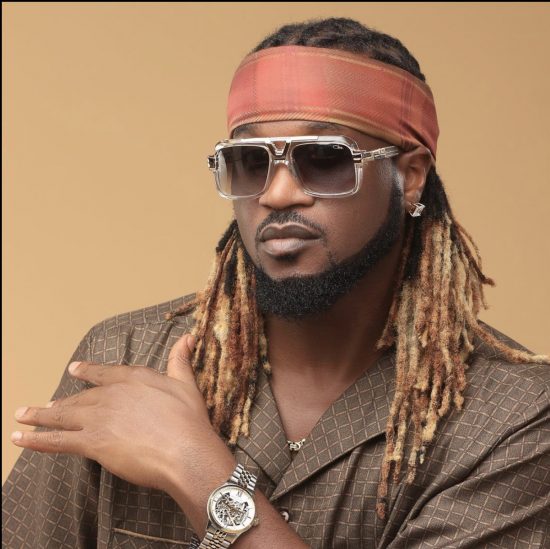

- I’ll Find You Anywhere: Rudeboy Warns Accuser in Explosive X Post

- FBI Announces ₦14m Bounty for Nigerian Man Wanted Over Fraud Charges

- Panic as Two-Storey Building Collapses in Lagos

- Tinubu Urges UN Reform, Nigeria’s Permanent Seat, and Fair Mineral Benefit at UNGA 80

need a video? video equipment rental in italy offering full-cycle services: concept, scripting, filming, editing and post-production. Commercials, corporate videos, social media content and branded storytelling. Professional crew, modern equipment and a creative approach tailored to your goals.

Продажа тяговых https://faamru.com аккумуляторных батарей для вилочных погрузчиков, ричтраков, электротележек и штабелеров. Решения для интенсивной складской работы: стабильная мощность, долгий ресурс, надёжная работа в сменном режиме, помощь с подбором АКБ по параметрам техники и оперативная поставка под задачу

Продажа тяговых https://ab-resurs.ru аккумуляторных батарей для вилочных погрузчиков и штабелеров. Надёжные решения для стабильной работы складской техники: большой выбор АКБ, профессиональный подбор по параметрам, консультации специалистов, гарантия и оперативная поставка для складов и производств по всей России

Официальная торговая платформа на кракен маркетплейс с escrow системой для защиты интересов покупателей и продавцов

Продажа тяговых ab-resurs.ru аккумуляторных батарей для вилочных погрузчиков и штабелеров. Надёжные решения для стабильной работы складской техники: большой выбор АКБ, профессиональный подбор по параметрам, консультации специалистов, гарантия и оперативная поставка для складов и производств по всей России

Продажа тяговых faamru.com аккумуляторных батарей для вилочных погрузчиков, ричтраков, электротележек и штабелеров. Решения для интенсивной складской работы: стабильная мощность, долгий ресурс, надёжная работа в сменном режиме, помощь с подбором АКБ по параметрам техники и оперативная поставка под задачу